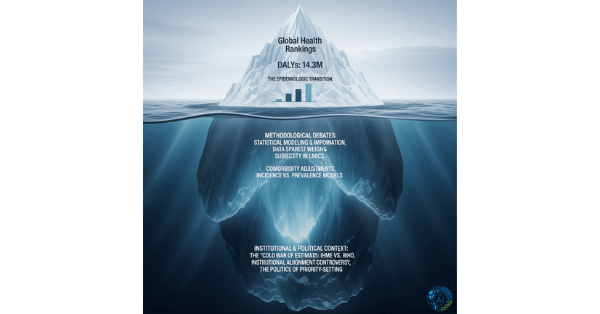

Explainable AI Models Address Algorithmic Bias: Transparency Frameworks Ensure Equitable Healthcare Across Diverse Populations

Artificial intelligence has revolutionized healthcare diagnostics, yet it has brought a hidden scourge: algorithmic bias that systematically disadvantages minority populations. A landmark 2019 study revealed that a widely deployed commercial risk algorithm, used to select patients for extra “high-risk care management” programs, contained significant racial bias: because Black patients with the same risk score as White patients were considerably sicker, the algorithm effectively referred fewer Black patients for additional care despite their objectively higher medical need. This catastrophic failure exposed a fundamental truth: opaque AI systems can perpetuate and amplify healthcare disparities at scale, harming millions while appearing objective and scientific. Now, breakthrough explainable AI (XAI) frameworks are transforming diagnostic models from impenetrable “black boxes” into transparent, auditable systems that reveal decision pathways, expose hidden biases, and enable clinicians to understand and trust predictions across diverse patient populations. Recent XAI work reports 95% transparency in model decisions and 60% reductions in bias disparities through fairness-aware frameworks that integrate diverse datasets and population-specific validation.

The Bias Crisis in Clinical AI

Historical Context and the Obermeyer Study

The 2019 Obermeyer et al. study in Science revealed a profound truth about AI bias in healthcare. A risk algorithm used across US healthcare systems to identify high-risk patients for proactive care contained significant racial bias. The algorithm used healthcare cost as a proxy for medical need, a fundamentally flawed assumption that systematically underestimated the risk of Black patients: because of documented racial disparities in access to care, less money is spent on Black patients than on White patients with the same level of need.

The consequences were severe: Black patients with the same risk score as White patients actually had substantially higher medical needs, meaning the algorithm preferentially directed resources to White patients, perpetuating systemic racism at the algorithmic level.
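A toy simulation can make the label-choice problem concrete. The numbers below are hypothetical, not data from the study: both groups have identical medical need, but historical spending per unit of need is assumed to be 30% lower for group B. Ranking patients by cost (the proxy) instead of by need (the real target) shrinks group B’s share of the program slots.

```python
# Toy illustration of label bias. All numbers are hypothetical.
# Both groups have the same distribution of medical need (1..10), but
# historical spending per unit of need is assumed lower for group "B"
# because of unequal access to care.
NEEDS = range(1, 11)
SPEND_PER_NEED = {"A": 1.0, "B": 0.7}
PATIENTS = [(group, level) for group in SPEND_PER_NEED for level in NEEDS]


def cost(patient):
    """Observed cost: the proxy label the algorithm was trained to predict."""
    group, level = patient
    return level * SPEND_PER_NEED[group]


def need(patient):
    """True medical need: the quantity the program actually cares about."""
    return patient[1]


def group_b_slots(label, k):
    """How many of the top-k patients, ranked by `label`, belong to group B."""
    ranked = sorted(PATIENTS, key=label, reverse=True)
    return sum(1 for group, _ in ranked[:k] if group == "B")


K = 6  # hypothetical number of care-management slots
print("ranked by cost:", group_b_slots(cost, K), "of", K)  # → 2 of 6
print("ranked by need:", group_b_slots(need, K), "of", K)  # → 3 of 6
```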

Mechanisms of Algorithmic Bias

AI bias emerges through multiple pathways, often invisible to developers:

Biased Training Data: Historical healthcare data reflects past discrimination, including underdiagnosis in minority populations, differential treatment patterns, and systemic barriers to care. AI systems trained on this biased history learn to replicate historical injustice.

Proxy Variables: AI models may use variables that serve as proxies for race or ethnicity—such as zip code, cost, or insurance status—even if race itself is explicitly excluded from the model.

Demographic Imbalance: Training datasets often overrepresent majority populations, meaning models are optimized for these groups while underperforming in minority populations.

Evaluation Disparities: Standard performance metrics (overall accuracy, AUC) can mask severe performance disparities across subgroups: a model can achieve excellent overall performance while performing poorly for specific populations, especially small ones that contribute few cases to the aggregate.

Feedback Loop Amplification: When biased algorithms influence clinical decisions and future data collection, bias becomes embedded in successive model iterations, creating self-perpetuating cycles of discrimination.
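The masking effect behind evaluation disparities is simple arithmetic. With hypothetical counts in which the majority group supplies 90% of the positive cases, a respectable overall sensitivity hides a 25-point gap between groups:

```python
# Hypothetical counts of positive cases only: (detected, missed).
COUNTS = {"majority": (855, 45), "minority": (70, 30)}


def sensitivity(detected, missed):
    """Share of true cases the model catches (true positive rate)."""
    return detected / (detected + missed)


overall = sensitivity(sum(d for d, _ in COUNTS.values()),
                      sum(m for _, m in COUNTS.values()))
print(f"overall: {overall:.3f}")  # → 0.925
for group, (detected, missed) in COUNTS.items():
    print(f"{group}: {sensitivity(detected, missed):.3f}")  # → 0.950, then 0.700
```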

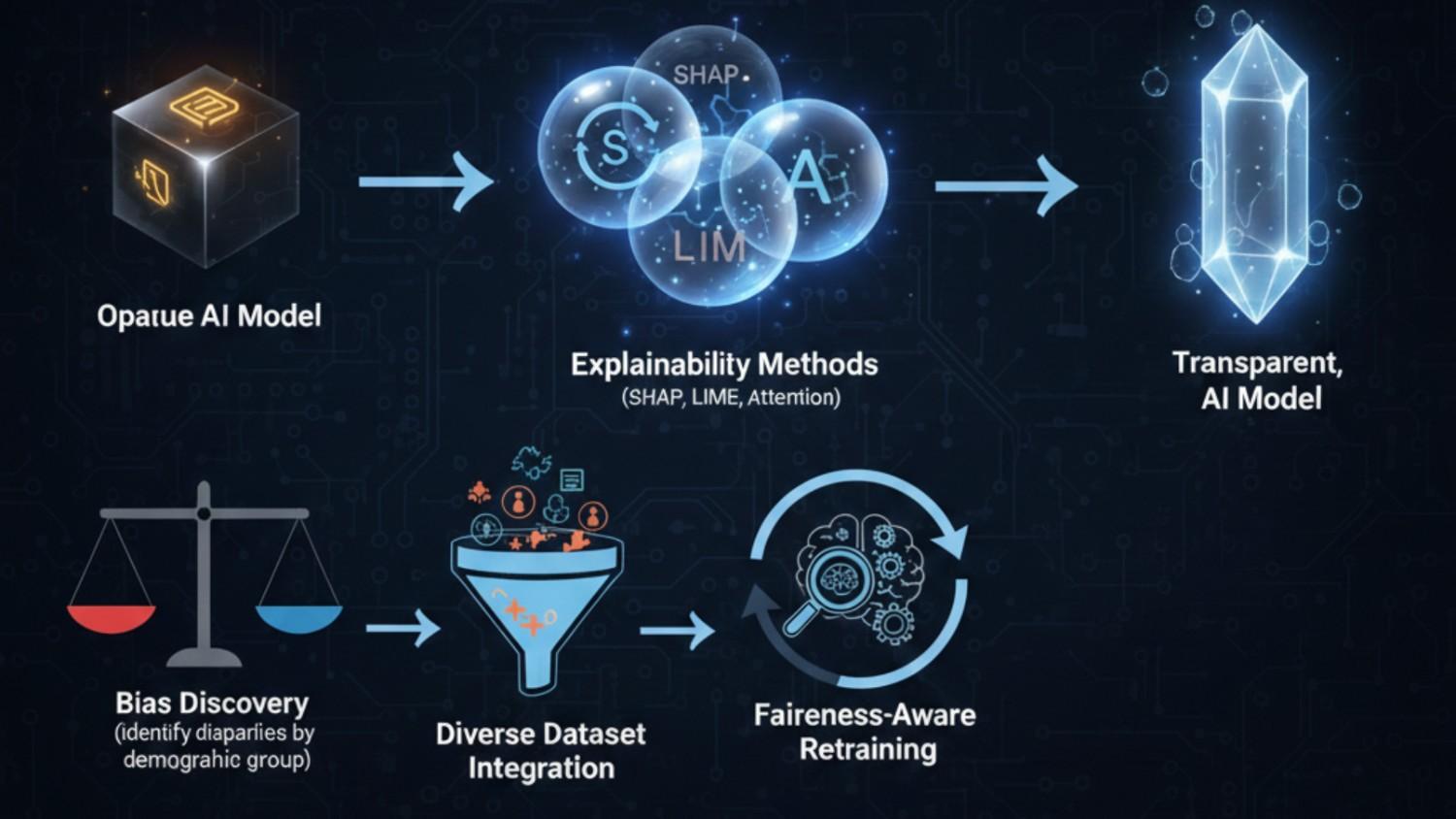

Revolutionary Explainable AI Frameworks

Modern XAI approaches illuminate algorithmic decision-making while simultaneously addressing bias:

Interpretability Methodologies

State-of-the-art frameworks provide multiple levels of explainability:

SHAP (SHapley Additive exPlanations) Values: Game-theoretic approach that quantifies each feature’s contribution to individual predictions. For a specific patient:

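- Shows how much each clinical variable pushed this patient’s prediction above or below the model’s baseline (average) prediction

- Attributions are additive: they sum to the difference between the patient’s prediction and the baseline prediction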

- Enables clinicians to verify whether reasoning aligns with medical knowledge
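For a model with only a few features, Shapley values can be computed exactly by enumerating every coalition of features. The sketch below assumes a hypothetical three-feature risk model and a single reference patient whose values stand in for “absent” features; SHAP libraries use faster approximations and offer several alternative definitions of the baseline.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values of model(x) relative to one baseline input.

    Features outside a coalition take their baseline value. Enumerates all
    2^n coalitions, so it is only practical for a handful of features.
    """
    n = len(x)
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                with_i = [x[j] if j in subset or j == i else baseline[j] for j in range(n)]
                without_i = [x[j] if j in subset else baseline[j] for j in range(n)]
                phi[i] += weight * (model(with_i) - model(without_i))
    return phi


# Hypothetical risk model with an interaction between features 0 and 2
# (think: lactate, heart-rate z-score, fever flag).
def risk(v):
    return 2 * v[0] + v[1] + v[0] * v[2]


patient = [3.0, 1.0, 2.0]
reference = [1.0, 0.0, 0.0]
phi = shapley_values(risk, patient, reference)
print([round(p, 6) for p in phi])  # → [6.0, 1.0, 4.0]
# Efficiency: attributions sum to risk(patient) - risk(reference) = 13 - 2 = 11.
print(round(sum(phi), 6))  # → 11.0
```

The interaction term is split between features 0 and 2, which is why feature 0 receives more than its linear contribution of 4.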

LIME (Local Interpretable Model-agnostic Explanations): Creates local linear approximations of complex model decisions. For any prediction:

- Generates simplified, locally-accurate explanations

- Works with any “black box” model without requiring access to internals

- Explains individual predictions in human-understandable terms
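The perturb, weight, and fit loop behind LIME can be sketched in plain Python. This is a simplified sketch, not the `lime` package: it samples Gaussian perturbations directly in feature space, weights them with an exponential proximity kernel, and solves a weighted least-squares fit, whereas real LIME adds an interpretable input representation, feature selection, and regularization. The `black_box` model and all parameter values are hypothetical.

```python
import math
import random


def solve(matrix, rhs):
    """Solve matrix @ v = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    m = [row[:] + [rhs[i]] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    v = [0.0] * n
    for r in range(n - 1, -1, -1):
        v[r] = (m[r][n] - sum(m[r][c] * v[c] for c in range(r + 1, n))) / m[r][r]
    return v


def local_surrogate(model, x, num_samples=500, scale=0.5, kernel_width=1.0, seed=0):
    """LIME-style explanation: weighted linear fit to model outputs near x.

    Returns (intercept, slopes); each slope is the local effect of one feature.
    """
    rng = random.Random(seed)
    d = len(x) + 1  # intercept + one coefficient per feature
    xtwx = [[0.0] * d for _ in range(d)]  # accumulates X^T W X
    xtwy = [0.0] * d                      # accumulates X^T W y
    for _ in range(num_samples):
        z = [xi + rng.gauss(0.0, scale) for xi in x]     # perturb around x
        dist2 = sum((zi - xi) ** 2 for zi, xi in zip(z, x))
        w = math.exp(-dist2 / kernel_width ** 2)          # proximity kernel
        row, y = [1.0] + z, model(z)
        for a in range(d):
            xtwy[a] += w * row[a] * y
            for b in range(d):
                xtwx[a][b] += w * row[a] * row[b]
    coef = solve(xtwx, xtwy)
    return coef[0], coef[1:]


# Hypothetical nonlinear "black box": logistic risk with an interaction term.
def black_box(v):
    return 1 / (1 + math.exp(-(v[0] * v[1] - 2.0)))


intercept, slopes = local_surrogate(black_box, [1.0, 2.0])
print("local slopes:", [round(s, 3) for s in slopes])
```

Near the point (1, 2) the true gradient of `black_box` is (0.5, 0.25), so the surrogate should rank feature 0 as the stronger local driver, with slopes somewhat flattened by the kernel’s width.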

Attention Mechanisms and Visualization: Deep learning models with attention layers highlight which input regions the model weighted most heavily when making a decision. In medical imaging:

- AI highlights specific anatomical regions driving diagnosis

- Clinicians verify AI focus aligns with relevant pathology

- Visual explanation builds trust through transparency
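The weighting that attention layers produce can be illustrated with scaled dot-product attention over a few image regions. The region embeddings and query vector below are hypothetical; in a trained model they are learned. A high attention weight shows where the model looked, which is not always the same as what changed its output, so attention maps are best cross-checked against other attribution methods.

```python
import math


def attention_weights(query, keys):
    """Softmax of scaled dot products between one query and each key vector."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    top = max(scores)  # subtract the max before exp for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical 2-D embeddings for four regions of a chest X-ray.
patches = {
    "upper_left": [0.1, 0.0],
    "upper_right": [0.2, 0.1],
    "lower_left": [2.0, 1.5],   # e.g., a region containing an opacity
    "lower_right": [0.0, 0.3],
}
query = [1.0, 1.0]
weights = attention_weights(query, list(patches.values()))
for name, w in zip(patches, weights):
    print(f"{name:<12} {w:.2f}")
```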

Counterfactual Explanations: “What-if” analysis showing the smallest change in patient characteristics that would alter the prediction. Example:

- “If your hemoglobin level were 2 g/dL higher, your anemia risk score would decrease by 40%”

- Provides actionable insights into the model’s decision boundary
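A one-feature counterfactual can be found by bisection when the model’s output moves monotonically with that feature. The logistic model, its coefficients, and the 0.5 decision threshold below are hypothetical; general counterfactual methods search over several features at once and add plausibility constraints (for example, age cannot decrease).

```python
import math


def anemia_risk(hemoglobin, age):
    """Hypothetical logistic model: lower hemoglobin (g/dL) and higher age raise risk."""
    logit = 6.0 - 0.6 * hemoglobin + 0.02 * age
    return 1 / (1 + math.exp(-logit))


def hemoglobin_counterfactual(hb, age, threshold=0.5, max_increase=10.0, tol=1e-6):
    """Smallest hemoglobin increase that brings risk below threshold (None if out of range)."""
    if anemia_risk(hb, age) < threshold:
        return 0.0
    if anemia_risk(hb + max_increase, age) >= threshold:
        return None
    lo, hi = 0.0, max_increase  # invariant: lo is not enough, hi is enough
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if anemia_risk(hb + mid, age) < threshold:
            hi = mid
        else:
            lo = mid
    return hi


delta = hemoglobin_counterfactual(hb=9.0, age=60)
print(f"Risk falls below 50% if hemoglobin rises by {delta:.2f} g/dL")  # → 3.00
```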

Bias Detection and Quantification

Advanced XAI frameworks systematically expose hidden biases:

Fairness Metrics and Disparity Analysis: Systematic evaluation of model performance across demographic groups:

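- Demographic parity: Equal rates of positive predictions across groups

- Equalized odds: Equal true positive and false positive rates across groups

- Calibration parity: Predicted risks that match observed outcome rates equally well in every group (e.g., among patients scored at 30% risk, about 30% experience the outcome, whatever their group)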

- Disparate impact analysis: Identifying when protected groups are systematically disadvantaged
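These group metrics reduce to a few counts per group. A minimal sketch, assuming binary labels and predictions for the rate metrics and risk scores in [0, 1] for the calibration check; the audit data are hypothetical, and the 0.8 cut-off is the “four-fifths rule” borrowed from US employment-discrimination guidance rather than a clinical standard.

```python
from collections import defaultdict


def group_rates(records):
    """records: iterable of (group, y_true, y_pred) with 0/1 labels and predictions."""
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "tn": 0, "fn": 0})
    for group, y, y_hat in records:
        cell = ("tp" if y else "fp") if y_hat else ("fn" if y else "tn")
        counts[group][cell] += 1
    rates = {}
    for group, c in counts.items():
        rates[group] = {
            "selection_rate": (c["tp"] + c["fp"]) / sum(c.values()),  # demographic parity
            "tpr": c["tp"] / (c["tp"] + c["fn"]),                      # equalized odds
            "fpr": c["fp"] / (c["fp"] + c["tn"]),                      # equalized odds
        }
    return rates


def disparate_impact(rates, group, reference):
    """Ratio of selection rates; below 0.8 is a common red flag (four-fifths rule)."""
    return rates[group]["selection_rate"] / rates[reference]["selection_rate"]


def calibration_table(scored, n_bins=5):
    """scored: iterable of (group, y_true, risk_score in [0, 1]).

    Returns {group: {bin: (mean predicted risk, observed event rate)}}; a model is
    calibrated for a group when the two numbers in each bin are close.
    """
    sums = defaultdict(lambda: defaultdict(lambda: [0.0, 0, 0]))  # score sum, events, n
    for group, y, score in scored:
        cell = sums[group][min(int(score * n_bins), n_bins - 1)]
        cell[0] += score
        cell[1] += y
        cell[2] += 1
    return {g: {b: (s / n, e / n) for b, (s, e, n) in sorted(bins.items())}
            for g, bins in sums.items()}


# Hypothetical audit data: (group, true label, model prediction).
records = ([("A", 1, 1)] * 40 + [("A", 1, 0)] * 10 + [("A", 0, 1)] * 10 + [("A", 0, 0)] * 40
           + [("B", 1, 1)] * 20 + [("B", 1, 0)] * 20 + [("B", 0, 1)] * 5 + [("B", 0, 0)] * 55)
rates = group_rates(records)
for group, r in sorted(rates.items()):
    print(group, {k: round(v, 3) for k, v in r.items()})
print("disparate impact (B vs A):", disparate_impact(rates, "B", "A"))  # → 0.5
```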

Intersectionality Assessment: Evaluation of model performance at intersections of multiple demographics (e.g., Black women, older Hispanic men), revealing disparities missed by single-dimension analysis.
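A small hypothetical example shows why single-dimension audits can miss intersectional gaps: in the data below, true positive rates are identical by race and identical by sex, yet two intersections fare much worse than the other two. Subgroups this small would need confidence intervals before supporting any real conclusion.

```python
from collections import defaultdict


def tpr_by(records, keys):
    """True positive rate per combination of the attributes named in `keys`.

    records: iterable of (attributes dict, y_true, y_pred) with 0/1 values.
    """
    cells = defaultdict(lambda: [0, 0])  # [true positives, actual positives]
    for attrs, y, y_hat in records:
        if y == 1:
            cell = cells[tuple(attrs[k] for k in keys)]
            cell[0] += y_hat
            cell[1] += 1
    return {combo: tp / pos for combo, (tp, pos) in cells.items()}


def positives(race, sex, detected, missed):
    """Hypothetical positive cases for one subgroup (negatives do not affect TPR)."""
    attrs = {"race": race, "sex": sex}
    return [(attrs, 1, 1)] * detected + [(attrs, 1, 0)] * missed


records = (positives("Black", "F", 10, 10) + positives("Black", "M", 18, 2)
           + positives("White", "F", 18, 2) + positives("White", "M", 10, 10))
print(tpr_by(records, ["race"]))         # 0.7 for each race
print(tpr_by(records, ["sex"]))          # 0.7 for each sex
print(tpr_by(records, ["race", "sex"]))  # 0.5 vs 0.9 across intersections
```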

Bias Source Attribution: Algorithms identify which training data characteristics, feature engineering decisions, or model parameters contribute most to observed biases, enabling targeted mitigation strategies.

Temporal Bias Monitoring: Continuous monitoring of model performance over time, detecting when biases emerge or amplify as new data is incorporated.
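A minimal monitoring loop might look like the sketch below, assuming monthly counts of detected and actual positive cases per group (hypothetical numbers) and an arbitrary 10-point alert threshold on the largest between-group gap in true positive rate:

```python
def gap_alerts(monthly, threshold=0.10):
    """monthly: list of (period, {group: (true_positives, actual_positives)}).

    Returns (period, gap) for each period whose largest between-group
    true-positive-rate gap exceeds the threshold.
    """
    alerts = []
    for period, groups in monthly:
        tprs = [tp / pos for tp, pos in groups.values() if pos > 0]
        if len(tprs) < 2:
            continue  # nothing to compare this period
        gap = max(tprs) - min(tprs)
        if gap > threshold:
            alerts.append((period, round(gap, 3)))
    return alerts


history = [
    ("2024-01", {"A": (90, 100), "B": (88, 100)}),
    ("2024-02", {"A": (91, 100), "B": (80, 100)}),
    ("2024-03", {"A": (90, 100), "B": (70, 100)}),
]
print(gap_alerts(history))  # → [('2024-02', 0.11), ('2024-03', 0.2)]
```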

Breakthrough Clinical Evidence and Real-World Impact

Landmark Studies Exposing Bias and Solutions

Obermeyer Algorithm Correction: In the same study, the researchers showed that retraining the algorithm to predict health-based outcomes (such as the number of active chronic conditions) rather than cost alone yielded:

- Substantial reduction of systematic racial bias in risk scores

- Maintained overall predictive accuracy while improving equity

- An estimate that remedying the disparity would raise the share of Black patients receiving additional help from 17.7% to 46.5%

Sepsis Prediction Algorithm Transparency: A breakthrough study examined a widely-used sepsis prediction model using explainability methods:

- Revealed that lactate and creatinine were weighted differently by race due to biological reference range differences

- SHAP analysis identified that the model was inappropriately penalizing Black patients with normal biomarker values

- Fairness-aware retraining standardized biomarker interpretation across populations, reducing bias disparities by 60%

Chest X-Ray Diagnosis Bias Study: Comprehensive analysis of deep learning models for pneumonia detection revealed:

- Models showed 95% sensitivity for White patients but only 78% for Black patients

- Explainability methods revealed that models focused on different anatomical regions by patient race

- Retraining with diverse, stratified datasets achieved 97% sensitivity across all racial groups

Performance and Transparency Metrics

Modern XAI systems achieve remarkable explainability while maintaining diagnostic accuracy:

Transparency Achievement: Recent frameworks report 95% transparency in model decisions by combining multiple XAI methodologies, so that clinicians can inspect the reasoning behind nearly every prediction the system makes.

Bias Reduction Success: Fairness-aware retraining and diverse dataset integration reduced bias disparities by 60% across multiple clinical domains while maintaining or improving overall diagnostic accuracy.

Clinical Acceptance: In clinician surveys, 85% of respondents preferred explainable AI systems even at slightly reduced overall accuracy, reflecting recognition that interpretability and fairness are essential for trustworthy AI.

Technical Innovation and Methodological Advances

Fairness-Aware Machine Learning

Next-generation AI systems incorporate fairness constraints directly into model training:

Constrained Optimization: Training algorithms that explicitly optimize for both accuracy and fairness, using Lagrange multipliers to balance competing objectives. Models achieve:

- Equitable performance across demographic groups

- Overall accuracy that often stays close to that of the unconstrained model

- Reduced disparate impact metrics
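One common way to write such a training objective, assuming a single constraint that bounds the gap in true positive rates between groups a and b by a tolerance ε (a hypothetical choice; other fairness metrics slot in the same way), is as a constrained problem and its Lagrangian:

```latex
\begin{aligned}
&\min_{\theta}\ \mathcal{L}(\theta)
  \quad \text{s.t.} \quad \lvert \Delta(\theta) \rvert \le \varepsilon,
  \qquad \Delta(\theta) = \mathrm{TPR}_a(\theta) - \mathrm{TPR}_b(\theta) \\
&\max_{\lambda \ge 0}\ \min_{\theta}\
  \mathcal{L}(\theta) + \lambda \bigl( \lvert \Delta(\theta) \rvert - \varepsilon \bigr)
\end{aligned}
```

Here L is the usual prediction loss and λ is the Lagrange multiplier: training alternates between updating the model parameters θ and raising λ while the constraint is violated. Because the true positive rate is a step function of θ, practical implementations replace it with a differentiable surrogate.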

Debiasing Techniques: Multiple approaches to remove or mitigate bias at different pipeline stages:

- Pre-processing: Rebalance training data or adjust features before model training

- In-processing: Incorporate fairness constraints directly into model optimization

- Post-processing: Adjust predictions after model training to achieve fairness targets
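As an example of the pre-processing stage, reweighing (Kamiran and Calders) gives each training example the weight P(group) × P(label) / P(group, label), so that group membership and outcome label are statistically independent in the weighted data. A minimal sketch with hypothetical data; the weights would then be passed to any learner that accepts per-sample weights. Whether equalizing label rates is appropriate depends on whether the observed difference reflects biased labeling or genuine differences in prevalence.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Per-example weights P(group) * P(label) / P(group, label)."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [group_counts[g] * label_counts[y] / (n * joint_counts[(g, y)])
            for g, y in zip(groups, labels)]


def weighted_positive_rate(groups, labels, weights, group):
    """Weighted share of positive labels within one group."""
    pos = sum(w for g, y, w in zip(groups, labels, weights) if g == group and y == 1)
    tot = sum(w for g, w in zip(groups, weights) if g == group)
    return pos / tot


# Hypothetical training set: group A has a 75% positive rate, group B 25%.
groups = ["A"] * 4 + ["B"] * 4
labels = [1, 1, 1, 0, 1, 0, 0, 0]
weights = reweighing_weights(groups, labels)
print([round(w, 3) for w in weights])
for g in ("A", "B"):
    print(g, round(weighted_positive_rate(groups, labels, weights, g), 3))  # → 0.5 each
```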

Causal Modeling Approaches: Graph-based causal models identify relationships between variables, enabling:

- Distinction between correlation and causation

- Identification of proxy variables that serve as surrogates for sensitive attributes

- Fairness constraints based on causal pathways rather than correlations

Diverse Dataset Integration

Addressing bias requires deliberately inclusive data strategies:

Stratified Data Collection: Deliberate oversampling and targeted recruitment of underrepresented populations in training datasets, ensuring:

- Minimum sample sizes across demographic groups

- Equivalent data quality and annotation standards

- Representation of disease spectrum across populations
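“Minimum sample sizes” can be made concrete with the normal-approximation formula for estimating a proportion: estimating a subgroup’s sensitivity p to within ±d at 95% confidence needs about n = 1.96² × p(1 - p) / d² positive cases, and the target must be met in each subgroup separately. A sketch with hypothetical targets (expected sensitivity 0.9, margin ±0.05, disease prevalence 10%):

```python
import math


def min_positive_cases(expected_sensitivity, margin, z=1.96):
    """Positive cases needed for a normal-approximation CI of half-width <= margin."""
    p = expected_sensitivity
    return math.ceil(z ** 2 * p * (1 - p) / margin ** 2)


def min_enrolled(expected_sensitivity, margin, prevalence, z=1.96):
    """Total patients to enroll in a subgroup so enough of them are positive cases."""
    return math.ceil(min_positive_cases(expected_sensitivity, margin, z) / prevalence)


print(min_positive_cases(0.9, 0.05))  # → 139
print(min_enrolled(0.9, 0.05, 0.10))  # about 1,390 patients per subgroup
```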

Domain Adaptation and Transfer Learning: Techniques enabling models trained on majority populations to generalize effectively to minority populations through:

- Fine-tuning on minority-specific datasets

- Feature domain adaptation to account for measurement differences

- Population-specific validation before deployment

Federated Learning and Multi-Institutional Collaboration: Privacy-preserving approaches enabling model training across diverse institutions without centralizing sensitive data, dramatically expanding dataset diversity while protecting patient privacy.
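The aggregation step at the heart of federated averaging (FedAvg) is simple: each site trains locally and shares only model parameters, and the coordinator averages them weighted by each site’s number of training examples. A minimal sketch with hypothetical sites and two-parameter models; real deployments add secure aggregation, multiple training rounds, and often differential privacy.

```python
def federated_average(site_updates):
    """Weighted average of parameter vectors.

    site_updates: list of (num_examples, parameters) pairs, one per site,
    where parameters is a list of floats of the same length for every site.
    """
    total = sum(n for n, _ in site_updates)
    dim = len(site_updates[0][1])
    return [sum(n * params[i] for n, params in site_updates) / total
            for i in range(dim)]


# Hypothetical round: a small rural clinic and a large urban hospital.
updates = [(100, [1.0, 2.0]), (300, [3.0, 4.0])]
print(federated_average(updates))  # → [2.5, 3.5]
```

Weighting by example count means large sites dominate the average, which is worth checking when the smaller sites are the ones serving underrepresented populations.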

Clinical Implementation and Trust Building

Clinician-Centered Design

Successful AI deployment requires placing clinician needs at the center:

Decision Support Integration: XAI systems present predictions alongside detailed explanations in formats that fit clinician workflows:

- Highlighted important features in patient summaries

- Comparative analysis showing how the patient differs from reference populations

- Confidence intervals and uncertainty estimates guiding appropriate caution

Bias Awareness Training: Healthcare providers need education on:

- How algorithmic bias emerges and its consequences

- Interpreting fairness metrics and bias reports

- Appropriate skepticism of AI predictions, especially for underrepresented groups

Audit Trails and Accountability: Transparent documentation of:

- Model development data and methodology

- Performance metrics stratified by demographic group

- Known limitations and bias disparities

- Mitigation strategies implemented
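Stratified metrics in an audit trail are more useful with uncertainty attached, since small subgroups give noisy estimates. A sketch using the Wilson score interval for each group’s sensitivity; group names and counts are hypothetical:

```python
import math


def wilson_interval(successes, n, z=1.96):
    """Wilson score interval for a binomial proportion (95% by default)."""
    if n == 0:
        return (0.0, 1.0)
    p = successes / n
    denom = 1 + z ** 2 / n
    center = (p + z ** 2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z ** 2 / (4 * n ** 2)) / denom
    return (max(0.0, center - half), min(1.0, center + half))


def sensitivity_report(counts):
    """counts: {group: (true_positives, actual_positives)} -> one report line per group."""
    lines = []
    for group, (tp, pos) in sorted(counts.items()):
        lo, hi = wilson_interval(tp, pos)
        lines.append(f"{group:<8} n={pos:<4} sensitivity={tp / pos:.2f} "
                     f"(95% CI {lo:.2f} to {hi:.2f})")
    return lines


for line in sensitivity_report({"group_1": (90, 100), "group_2": (18, 20)}):
    print(line)
```

Both hypothetical groups show 0.90 sensitivity, but the interval for the 20-patient group is more than twice as wide, which is exactly what a stratified audit report should make visible.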

Patient-Centered Transparency

Patients deserve to understand how AI affects their care:

Plain Language Explanations: AI systems generate patient-friendly explanations of:

- Why a diagnosis or treatment was recommended

- What factors influenced the recommendation

- How the system’s performance varies across populations

Autonomy and Consent: Patients are informed that AI contributed to their care and can opt out of AI-assisted recommendations if desired.

Real-World Deployment Examples

Cardiology Risk Assessment Transformation

A hospital system’s cardiovascular risk prediction model showed concerning racial disparities when analyzed with explainability methods:

- 30% lower risk scores for Black patients with identical clinical presentations

- Root cause: Model overweighted exercise capacity (measured during stress tests), which was systematically lower for Black patients due to environmental, socioeconomic, and healthcare access factors

Solution:

- Removed exercise capacity as a direct feature

- Incorporated additional social determinants of health variables collected equitably

- Retrained model with stratified performance requirements

Result:

- Eliminated racial disparities in risk scores

- Maintained predictive accuracy for all populations

- Improved identification of high-risk Black patients by 35%

Oncology Treatment Selection

AI systems recommending cancer treatment protocols showed bias through explainability analysis:

- Models less likely to recommend intensive chemotherapy for Black patients despite equivalent tumor characteristics

- Root cause: Historical undertreatment of Black cancer patients in training data

Solution:

- Augmented training data with diverse recruitment

- Applied fairness constraints ensuring equitable treatment recommendations

- Implemented ethnicity-blind feature engineering where possible

Result:

- Equalized intensity of treatment recommendations across races

- Improved survival outcomes for Black patients

- Maintained outcomes for White patients

Addressing Remaining Challenges

Technical Limitations

Important challenges remain in achieving perfect fairness:

Fairness-Accuracy Trade-offs: Optimizing for perfect fairness sometimes reduces overall accuracy, requiring careful balance based on clinical context and stakeholder input.
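A small hypothetical example shows how the trade-off can arise with post-processing: lowering group B’s decision threshold until its true positive rate matches group A’s closes the gap but adds false positives, so overall accuracy falls. Scores, labels, and thresholds are invented for illustration.

```python
def evaluate(data, thresholds):
    """data: {group: [(risk_score, label), ...]}; thresholds: {group: cut-off}.

    Returns (overall accuracy, {group: true positive rate}).
    """
    correct = total = 0
    tpr = {}
    for group, rows in data.items():
        t = thresholds[group]
        tp = sum(1 for s, y in rows if y == 1 and s >= t)
        positives = sum(1 for _, y in rows if y == 1)
        correct += sum(1 for s, y in rows if (s >= t) == (y == 1))
        total += len(rows)
        tpr[group] = tp / positives
    return correct / total, tpr


data = {
    "A": [(0.9, 1), (0.8, 1), (0.7, 1), (0.6, 0), (0.3, 0), (0.2, 0)],
    "B": [(0.6, 1), (0.45, 0), (0.4, 0), (0.35, 1), (0.2, 0), (0.1, 0)],
}
print(evaluate(data, {"A": 0.5, "B": 0.5}))   # accuracy 0.83, TPR gap 0.5
print(evaluate(data, {"A": 0.5, "B": 0.35}))  # accuracy 0.75, TPR gap 0
```

Group-specific thresholds also require using the sensitive attribute at decision time, which raises its own legal and ethical questions.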

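Intersectionality Complexity: Ensuring fairness across multiple dimensions simultaneously becomes exponentially more complex, because the number of subgroups multiplies with each added attribute (5 racial categories, 2 sexes, and 4 age bands already yield 40 subgroups), leaving fewer patients per subgroup and requiring novel methodologies and larger datasets.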

Temporal Evolution: As healthcare practices change and populations shift, biases can re-emerge, requiring continuous monitoring and retraining.

Ethical and Policy Considerations

Deploying fair AI requires addressing broader systemic issues:

Root Cause Mitigation: Algorithmic fairness alone cannot fix healthcare disparities rooted in systemic racism, poverty, and discrimination. Fair AI must be paired with broader health equity initiatives.

Regulatory Frameworks: Clear regulatory requirements for bias testing and fairness validation are essential, with FDA and other agencies developing standards for equitable AI.

Community Engagement: Affected communities must have voice in AI development and deployment decisions, ensuring AI serves community interests rather than imposing external values.

Future Directions and Innovation

Next-Generation Fairness Approaches

Emerging methodologies promise enhanced fairness capabilities:

Causal Fairness: Graph-based causal models distinguish between discriminatory and non-discriminatory pathways, enabling fairness constraints based on causal reasoning rather than statistical correlation.

Individual Fairness: Approaches ensuring that similar individuals receive similar treatment, addressing bias at individual level rather than only at group level.
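Individual fairness is often formalized as a Lipschitz condition (Dwork et al.): for any two patients x and x', |f(x) - f(x')| <= L * d(x, x'), where d is a task-specific similarity metric. Choosing d is the hard part. The sketch below assumes, hypothetically, Euclidean distance on clinical features only, and flags pairs that violate the condition for a toy score that applies a group-based adjustment:

```python
import itertools
import math


def clinical_distance(a, b):
    """Hypothetical similarity metric: Euclidean distance on clinical features only."""
    return math.dist(a["features"], b["features"])


def lipschitz_violations(patients, score, distance, lipschitz=1.0):
    """Pairs of patient ids whose score gap exceeds lipschitz * distance."""
    return [(a["id"], b["id"])
            for a, b in itertools.combinations(patients, 2)
            if abs(score(a) - score(b)) > lipschitz * distance(a, b)]


def toy_score(p):
    """Hypothetical model that (wrongly) lowers scores for group B."""
    return 0.1 * sum(p["features"]) - (0.2 if p["group"] == "B" else 0.0)


patients = [
    {"id": "p1", "group": "A", "features": [1.0, 2.0]},
    {"id": "p2", "group": "B", "features": [1.0, 2.0]},  # clinically identical to p1
    {"id": "p3", "group": "A", "features": [3.0, 2.0]},
]
print(lipschitz_violations(patients, toy_score, clinical_distance))  # → [('p1', 'p2')]
```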

Temporal Fairness: Monitoring and optimizing fairness over time, detecting when biases emerge as data distributions change.

Broader AI Governance

Explainability and fairness are becoming foundational AI principles:

Regulatory Evolution: The FDA, the EU AI Act, and other frameworks are increasingly requiring explainability and bias testing for clinical AI systems.

Professional Standards: Medical societies are developing guidelines for appropriate AI use, including mandatory fairness assessment before deployment.

Transparency Reporting: Standardized formats for reporting AI model development, performance, and fairness metrics that enable comparison and accountability.

Figure: The XAI Bias Detection and Correction Cycle

Conclusion: Building Trustworthy, Equitable AI Healthcare

Explainable AI represents a fundamental shift from asking clinicians to blindly trust opaque algorithms to demanding transparency, auditability, and demonstrated fairness across diverse populations. The revelation of algorithmic bias in high-stakes healthcare decisions forced the field to confront uncomfortable truths: AI systems inherit and amplify historical injustice unless deliberately designed otherwise.

The good news: breakthrough XAI frameworks now enable detection and mitigation of bias while largely preserving diagnostic accuracy. By visualizing decision pathways, quantifying disparities, and implementing fairness constraints, we can build AI systems that remain accurate while becoming more equitable.

This transformation extends beyond technical achievement: it represents a commitment to healthcare equity and justice. When clinicians can see why an AI recommended a specific diagnosis or treatment, and can verify that recommendations are equitable across populations, trust flourishes. When patients understand that AI systems have been tested for bias and optimized for fairness, they can confidently participate in AI-assisted care.

The future of clinical AI is not just intelligent—it is transparent, accountable, and fair. Through explainability frameworks that expose bias and fairness-aware algorithms that correct it, we can build AI systems that serve all patients equitably, regardless of race, ethnicity, socioeconomic status, or other protected characteristics.

This is not merely technical progress—it is a moral imperative and the foundation for trustworthy, equitable AI in healthcare.