Multi-Modal AI Diagnoses Diseases Earlier: The Revolution of Integrated Healthcare Intelligence

Multi-modal artificial intelligence systems integrate imaging, electronic health records, genomics, and real-time biosensor data to detect diseases earlier and more accurately than single-source methods. One multimodal AI platform recently reported 94.8% diagnostic accuracy after combining 96,000 radiographs, 23,500 structured EHR records, and 9,440 genomic profiles. It outperformed human clinicians by 5.6% and was particularly capable at identifying early-stage cancers and autoimmune diseases. This represents a shift from traditional single-modality diagnostics toward comprehensive, data-driven healthcare intelligence.

The Multi-Modal Advantage in Medical Diagnosis

Traditional medical diagnosis relies heavily on individual data sources: a radiologist interpreting scans, a physician reviewing lab results, or a geneticist analyzing genomic markers. However, human health and disease exist at the intersection of multiple biological systems, and no single data modality can provide the comprehensive analysis this requires.

Comprehensive Data Integration

Multi-modal AI systems combine diverse healthcare data streams including:

- Medical Imaging: CT, MRI, PET, ultrasound, and X-ray data

- Electronic Health Records: Clinical notes, lab results, vital signs, and patient histories

- Genomic Data: DNA sequences, gene expression profiles, and genetic variants

- Wearable Biosensors: Continuous physiological monitoring and activity tracking

- Pathological Data: Tissue samples and molecular biomarkers

This integration enables holistic patient assessment that captures both structural abnormalities visible in imaging and functional changes reflected in clinical and molecular data.

Superior Performance Metrics

Recent comprehensive analyses demonstrate consistent advantages of multi-modal approaches:

Performance Improvements:

- 15-30% higher precision compared to single-modality analyses for rare disease diagnosis

- 25% improvement in early-stage disease detection accuracy using deep multi-cascade fusion algorithms

- 6-33% performance gains across various healthcare demonstrations in the HAIM framework study

- Consistent outperformance of single-modality counterparts across 14,324 independent models

Breakthrough Clinical Applications

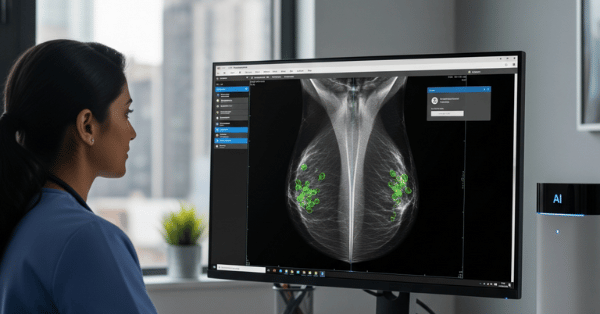

Early Cancer Detection Revolution

AutoCancer Framework: Researchers developed an automated multimodal framework specifically for early cancer detection that integrates liquid biopsy data, imaging, and clinical parameters. The system demonstrates robust performance across multiple cancer types while providing strong interpretability for clinical decision-making.

Breast Cancer Multi-Modal Integration: A comprehensive study combining pathology imaging, molecular data, and clinical records from The Cancer Genome Atlas achieved remarkable results in predicting disease-free survival:

- Training Cohort Performance: AUC values of 0.979 (1-year), 0.957 (3-year), and 0.871 (5-year) DFS predictions

- External Validation: AUC values reaching 0.851, 0.878, and 0.938 for 1-, 2-, and 3-year predictions respectively

- Hazard Ratio: 0.027 in the training cohort, indicating a very large difference in hazard between the compared groups
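For readers unfamiliar with AUC, the following minimal sketch shows one way the metric can be computed for a fixed prediction horizon: as the fraction of positive/negative pairs in which the positive case receives the higher score. The function name `roc_auc` and the toy labels and scores are hypothetical and are not taken from the study. Survival analyses typically use time-dependent variants that also handle censoring, which this sketch does not attempt.

```python
def roc_auc(labels, scores):
    """AUC as the probability that a random positive outranks a random negative.

    Ties count as half a win (the Mann-Whitney U formulation).
    """
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one positive and one negative case")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Toy data (hypothetical): 1 = event within the horizon, 0 = no event.
labels = [1, 1, 1, 0, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.7, 0.3, 0.2, 0.1]
auc = roc_auc(labels, scores)
print(f"AUC = {auc:.3f}")
```

An AUC of 0.5 corresponds to chance-level ranking and 1.0 to perfect ranking, which is why values such as 0.979 are read as very strong discrimination.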

Lung Cancer Multi-Modal Detection: Advanced AI systems that combine CT and MRI scans use morphological data from CT together with functional physiological data from MRI, achieving 97.1% classification accuracy and an AUC-ROC of 0.957 in non-small cell lung cancer detection.

Neurological Disorder Diagnosis

Alzheimer’s Disease Prediction: Multi-modal AI systems that integrate brain imaging, clinical histories, and genomics using CNN-LSTM architectures achieve AUC values above 0.9 across multiple diagnostic tasks. These systems enable earlier intervention by detecting subtle patterns across modalities that individual assessments might miss.

Stroke Detection: A multimodal deep learning approach based on the FAST (Face Arm Speech Test) protocol processes video recordings of patient movements and speech audio simultaneously. The system is reported to offer high clinical value and to outperform traditional single-modality approaches in emergency clinical settings.

Comprehensive Disease Screening

Multi-Disease Detection Platform: The Deep Multi-Cascade Fusion (DMC-Fusion) algorithm processes MRI, CT, and PET imaging data with self-supervised learning techniques, achieving 92% sensitivity and 95% specificity in identifying malignancies across five different disease types including brain tumors and lung cancer.
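Sensitivity and specificity, as quoted above, are derived from a confusion matrix. The sketch below shows the arithmetic on a small set of hypothetical predictions; the function name and the toy data are illustrative assumptions and do not reproduce the DMC-Fusion evaluation.

```python
def sensitivity_specificity(y_true, y_pred):
    """Return (sensitivity, specificity) for binary labels, where 1 = disease."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    if tp + fn == 0 or tn + fp == 0:
        raise ValueError("need at least one diseased and one healthy case")
    sensitivity = tp / (tp + fn)  # share of diseased cases that were flagged
    specificity = tn / (tn + fp)  # share of healthy cases that were cleared
    return sensitivity, specificity


# Hypothetical toy data: 4 diseased cases, 6 healthy cases.
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 0, 0, 0, 0, 0, 1]
sens, spec = sensitivity_specificity(y_true, y_pred)
print(f"sensitivity = {sens:.2f}, specificity = {spec:.2f}")
```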

Retinal Disease Prediction: The VisionTrack system integrates OCT images, fundus images, and clinical risk factors using CNNs, Graph Neural Networks, and Large Language Models to predict multiple retinal diseases simultaneously, achieving accuracy of 0.980 on the RetinalOCT dataset and 0.989 on the RFMiD dataset.

Technical Architecture and Innovation

Advanced Fusion Strategies

Early Fusion Approaches: In early fusion, raw data from multiple modalities is combined before any model processes it. Research reports that this technique consistently outperforms other fusion strategies, and studies show that 65% of successful multimodal systems employ it. Early fusion is particularly effective when combining 1D clinical data with 2D/3D imaging data.
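The difference between early and late fusion can be illustrated with a minimal sketch. Early fusion here means concatenating the modality inputs into one vector and applying a single model; late fusion means running one model per modality and averaging the predicted probabilities. The logistic models, weights, and feature values below are hypothetical placeholders chosen only to make the example runnable, and simple feature vectors stand in for raw imaging data.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def linear_score(features, weights, bias):
    if len(features) != len(weights):
        raise ValueError("feature and weight lengths differ")
    return sum(f * w for f, w in zip(features, weights)) + bias


def early_fusion_predict(imaging, clinical, weights, bias):
    """Concatenate the modalities first, then apply ONE model to the joint vector."""
    fused = list(imaging) + list(clinical)
    return sigmoid(linear_score(fused, weights, bias))


def late_fusion_predict(imaging, clinical, img_model, clin_model):
    """Apply one model PER modality, then average the two probabilities."""
    p_img = sigmoid(linear_score(imaging, *img_model))
    p_clin = sigmoid(linear_score(clinical, *clin_model))
    return 0.5 * (p_img + p_clin)


# Hypothetical inputs: two imaging-derived features and one clinical value.
imaging = [0.8, 0.1]
clinical = [1.2]

p_early = early_fusion_predict(imaging, clinical, [1.0, -0.5, 0.7], -0.5)
p_late = late_fusion_predict(
    imaging, clinical,
    img_model=([1.0, -0.5], -0.2),   # (weights, bias), illustrative only
    clin_model=([0.7], -0.3),
)
print(f"early fusion: {p_early:.3f}, late fusion: {p_late:.3f}")
```

Because the early-fusion model sees all inputs at once, it can in principle learn interactions between modalities, whereas the late-fusion average treats each modality's prediction independently.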

Adaptive Collaborative Learning: The AdaCoMed framework integrates large single-modal medical models with small multimodal models. This approach employs:

- Mixture-of-Modality-Experts (MoME) architecture for feature combination

- Adaptive co-learning mechanisms that dynamically balance complementary strengths

- Consistent improvements over state-of-the-art baselines across six modalities and four diagnostic tasks
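As a rough illustration of how a mixture-of-experts layer can combine modality-specific features, the sketch below weights each expert's output with a softmax gate and sums the results. It is a generic mixture-of-experts pattern under stated assumptions, not AdaCoMed's actual MoME implementation; the function names, expert outputs, and gate logits are all hypothetical.

```python
import math


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def mixture_of_modality_experts(expert_outputs, gate_logits):
    """Fuse per-modality feature vectors using softmax gate weights.

    expert_outputs: dict mapping modality name -> feature vector (equal lengths)
    gate_logits:    dict mapping modality name -> unnormalized gate score
    """
    names = sorted(expert_outputs)
    weights = softmax([gate_logits[n] for n in names])
    dim = len(expert_outputs[names[0]])
    fused = [0.0] * dim
    for name, w in zip(names, weights):
        vec = expert_outputs[name]
        if len(vec) != dim:
            raise ValueError(f"expert '{name}' has a different feature size")
        for i, v in enumerate(vec):
            fused[i] += w * v
    return fused, dict(zip(names, weights))


# Hypothetical expert features and gate scores for one patient.
expert_outputs = {"imaging": [1.0, 0.0], "ehr": [0.0, 1.0], "genomics": [1.0, 1.0]}
gate_logits = {"imaging": 2.0, "ehr": 0.5, "genomics": 0.0}
fused, gate_weights = mixture_of_modality_experts(expert_outputs, gate_logits)
print(fused, gate_weights)
```

In a trained system the gate scores would come from a learned network conditioned on the input, so the balance between modalities could shift from patient to patient.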

Deep Learning Architecture Evolution

Transformer-Based Models: Recent advances use transformer architectures designed specifically for multimodal medical data. The Multi-modal Transformer (MMT), which learns from longitudinal imaging data across FFDM, DBT, ultrasound, and MRI modalities, achieved:

- AUROC of 0.943 for cancer detection

- AUROC of 0.796 for 5-year risk prediction

- Significant improvements when incorporating MRI data across all diagnostic tasks

Holistic AI in Medicine (HAIM) Framework: This comprehensive approach facilitates generation and testing of AI systems leveraging multimodal inputs. Evaluating 14,324 independent models across 34,537 samples, the framework consistently produces models outperforming single-source approaches by 6-33% across various healthcare tasks.

Real-World Clinical Impact

Enhanced Diagnostic Accuracy

Prostate Cancer Detection: Multimodal AI combining deep learning suspicion levels with clinical parameters achieved superior performance compared to clinical-only (0.77 vs 0.67 AUC) and imaging-only approaches (0.77 vs 0.70 AUC). Early fusion outperformed late fusion approaches, demonstrating enhanced robustness in multicenter settings.

Rare Disease Diagnosis: Multi-modal AI integration shows 15-30% higher precision rates compared to single-modality analyses for rare diseases. The technology enables earlier detection of rare genetic disorders, metabolic diseases, and complex syndromic conditions that often remain undiagnosed for years.

Workflow Efficiency and Clinical Decision Support

Reduced Diagnostic Delays: Comprehensive analysis shows multimodal systems can reduce diagnostic delay and individualize treatment planning. By providing objective, reproducible analyses, these systems democratize access to specialized diagnostic capabilities.

Improved Clinical Workflow: Studies demonstrate that multimodal AI systems streamline clinical workflows, reduce diagnostic turnaround times, and ease the burden on healthcare professionals. Automating time-consuming image analysis tasks allows clinicians to focus on patient care and complex decision-making.

Specialized Applications and Innovations

Personalized Medicine Integration

Precision Oncology: Multimodal approaches in cancer care integrate histopathology images with genomic data, clinical records, and patient histories to enhance diagnostic accuracy and treatment selection. Advanced fusion techniques including encoder-decoder architectures, attention-based mechanisms, and graph neural networks enable personalized therapeutic strategies.

Treatment Outcome Prediction: Multi-modal AI systems excel at predicting treatment responses by combining imaging biomarkers with clinical and molecular data. This capability enables personalized treatment strategies and improved patient outcomes through data-driven therapeutic selection.

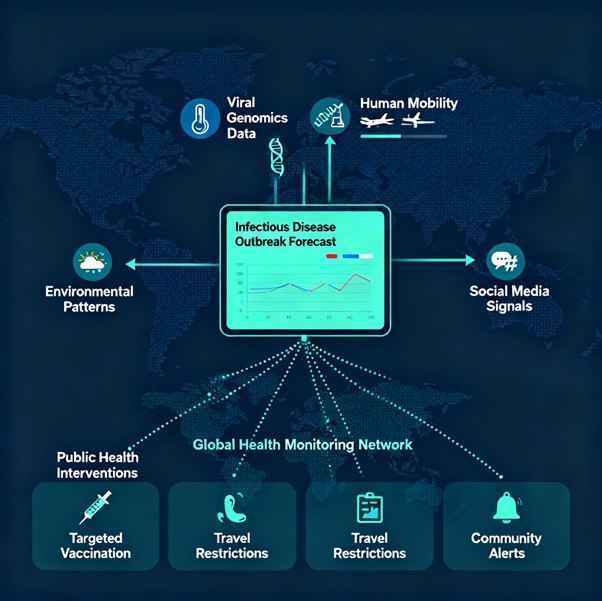

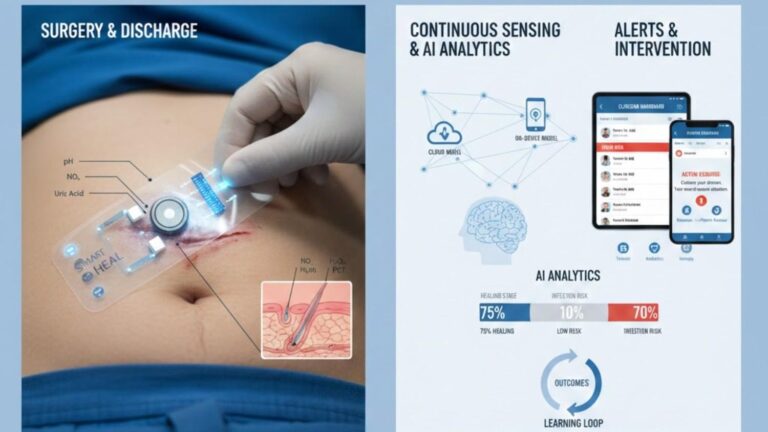

Continuous Monitoring and Early Warning

Wearable Integration: Advanced systems incorporate continuous biosensor data from wearable devices with traditional clinical data sources. This integration enables real-time health monitoring and early disease detection through pattern recognition across physiological parameters.
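One simple form of pattern recognition on a biosensor stream is a rolling z-score: each new reading is compared against the mean and standard deviation of a trailing window. The sketch below is an assumption-laden illustration, not a description of any system cited here; the window size, threshold, and heart-rate values are hypothetical.

```python
from collections import deque
from statistics import mean, stdev


def rolling_zscore_alerts(readings, window=5, threshold=3.0):
    """Return indices of readings that deviate from the trailing window
    by more than `threshold` sample standard deviations."""
    history = deque(maxlen=window)
    alerts = []
    for i, value in enumerate(readings):
        if len(history) == window:
            mu = mean(history)
            sigma = stdev(history)
            if sigma > 0 and abs(value - mu) / sigma > threshold:
                alerts.append(i)
        history.append(value)  # the new reading joins the window afterwards
    return alerts


# Hypothetical resting heart-rate samples (beats per minute).
heart_rate = [62, 64, 63, 61, 63, 62, 64, 110, 63, 62]
alerts = rolling_zscore_alerts(heart_rate)
print(alerts)
```

A deployed system would likely combine several physiological signals and account for context such as exercise, which a single-channel threshold cannot do.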

Multimodal Biomarkers: Recent developments include AI-based biomarkers that dynamically adapt to patient-specific factors such as age, race, ethnicity, and weight. These personalized biomarkers provide scalable solutions for early disease detection across diverse populations.

Addressing Implementation Challenges

Data Integration Complexity

Standardization Requirements: Successful multimodal AI implementation requires addressing challenges related to data standardization, algorithm validation, and clinical workflow integration. Healthcare institutions must invest in interoperable data systems that can effectively combine diverse data sources.

Privacy and Security: Multi-modal systems must address data privacy concerns when combining sensitive information from multiple sources. Privacy-preserving federated learning frameworks offer promising solutions for training models across institutions without sharing raw data.
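The core aggregation step of federated learning can be sketched as a weighted average of model parameters, where each institution trains locally and shares only its parameters and sample count. This follows the widely used federated averaging idea in simplified form; the function name, the flat parameter lists, and the site sizes are hypothetical.

```python
def federated_average(site_updates):
    """Average model parameters across sites, weighted by local sample count.

    site_updates: list of (num_samples, parameter_list) tuples, one per site.
    Only parameters and counts are exchanged; raw patient data stays on site.
    """
    total = sum(n for n, _ in site_updates)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    dim = len(site_updates[0][1])
    averaged = [0.0] * dim
    for n, params in site_updates:
        if len(params) != dim:
            raise ValueError("all sites must share the same model shape")
        for i, p in enumerate(params):
            averaged[i] += (n / total) * p
    return averaged


# Hypothetical round: hospital A has 100 local samples, hospital B has 300.
site_updates = [(100, [0.2, 1.0]), (300, [0.6, 2.0])]
global_params = federated_average(site_updates)
print(global_params)
```

In practice this step repeats over many rounds, with the averaged parameters sent back to each site as the starting point for the next round of local training.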

Clinical Adoption Strategies

Explainable AI Integration: Modern multimodal systems incorporate explainable AI methods including Grad-CAM, SHAP, LIME, and attention mechanisms to enhance diagnostic precision and strengthen clinician confidence. These transparency features are crucial for clinical adoption and regulatory approval.
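SHAP is built on Shapley values, which split a model's output fairly among its inputs. The sketch below applies the same idea at the level of whole modalities rather than individual features, using the prostate cancer AUC figures reported earlier in this article (0.67 clinical-only, 0.70 imaging-only, 0.77 combined). The 0.50 baseline for "no modality" is an assumption (chance-level AUC), and the function is an exact Shapley computation written for illustration, not the SHAP library's API.

```python
from itertools import combinations
from math import factorial


def shapley_values(players, value):
    """Exact Shapley values; `value` maps a frozenset of players to a number."""
    n = len(players)
    result = {}
    for p in players:
        others = [q for q in players if q != p]
        total = 0.0
        for k in range(n):
            for subset in combinations(others, k):
                s = frozenset(subset)
                # Probability that exactly this subset precedes p in a random ordering.
                weight = factorial(k) * factorial(n - k - 1) / factorial(n)
                total += weight * (value(s | {p}) - value(s))
        result[p] = total
    return result


# AUC per modality subset; the empty-set baseline of 0.50 is an assumption.
auc_by_subset = {
    frozenset(): 0.50,
    frozenset({"imaging"}): 0.70,
    frozenset({"clinical"}): 0.67,
    frozenset({"imaging", "clinical"}): 0.77,
}
phi = shapley_values(["imaging", "clinical"], lambda s: auc_by_subset[s])
print(phi)
```

Under these assumptions the two contributions sum to the total gain over chance (0.27 AUC), with imaging credited slightly more than the clinical parameters.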

Validation and Generalization: Comprehensive external validation across diverse healthcare settings demonstrates that multimodal approaches maintain performance advantages across different populations and clinical environments.

Future Implications and Scalability

The convergence of advanced AI architectures with comprehensive healthcare data integration represents a fundamental shift toward precision medicine and early intervention strategies. Multi-modal AI systems are positioned to:

- Transform diagnostic workflows by providing comprehensive disease risk assessment

- Enable personalized treatment protocols based on individual patient data profiles

- Facilitate early detection of complex diseases through pattern recognition across data modalities

- Reduce healthcare costs through improved diagnostic accuracy and reduced unnecessary procedures

As healthcare systems worldwide generate increasing volumes of diverse data, multi-modal AI platforms offer scalable solutions that can adapt to local datasets, clinical questions, and institutional requirements. The generalizable properties and flexibility of these systems could offer promising pathways for widespread clinical deployment across various healthcare settings.

The future of medical diagnosis lies not in replacing human clinical judgment, but in augmenting physician expertise with comprehensive, data-driven insights that capture the full complexity of human health and disease. This integration of human intelligence with multi-modal artificial intelligence promises to deliver more accurate, efficient, and personalized healthcare for patients worldwide.