AI-Optimized Clinical Trial Design: How Predictive Models Cut Timelines and Costs for New Therapies

Clinical research is at a paradoxical moment. Science is generating more promising therapies than ever, especially in oncology, but trials remain slow, expensive, and hard to enroll. Trial costs can run from tens to hundreds of millions of dollars per asset, and accrual delays and protocol amendments add months or years to timelines. Every month lost is a month patients wait for potentially life-extending treatments.

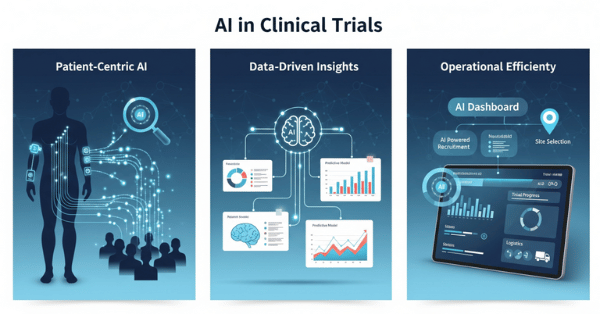

AI-optimized clinical trial design is changing this equation. By simulating enrollment, site performance, and outcome scenarios before a protocol ever goes live, predictive models allow sponsors and community research programs to “flight test” a trial on data instead of on patients. Across portfolios, leading biopharma companies report timeline reductions of up to 30% and six to twelve months of acceleration per asset when AI and ML are systematically applied to design, planning, and operations.

For community oncology—where ACCC members are on the front lines of cancer research—these tools are particularly powerful. They can help design studies that are feasible in real-world settings, more inclusive, and better aligned with the patients actually seen in community clinics.

Why Traditional Trial Design Falls Short

Classically, trial design is driven by expert opinion, limited feasibility data, and static assumptions:

- Overly restrictive eligibility criteria shrink the pool of eligible patients and slow accrual.

- Optimistic enrollment forecasts lead to missed milestones and repeated rescue amendments.

- Underpowered sample sizes risk inconclusive results, while overpowered ones waste resources and patient effort.

- Sites are selected based on relationships and historical “feel” rather than hard performance data.

The result: 40–50% of trials require at least one substantial amendment, many due to flawed initial design assumptions, and a high fraction terminate early for poor accrual or operational issues.

AI and ML flip this paradigm from “design by assumption” to “design by simulation.”

How Predictive Models Optimize Trial Design

1. Simulating Eligibility Criteria and Patient Pools

One of the most powerful applications of AI in design is using real-world data to test and refine inclusion and exclusion criteria before first-patient-in.

Tools like Trial Pathfinder ingest large volumes of de-identified EHR and claims data from prior oncology patients to build “virtual” trial cohorts.

- The model applies the planned eligibility criteria to real patient histories.

- It estimates how many patients at participating centers would qualify.

- It can then iteratively “relax” individual criteria (e.g., a creatinine cutoff, a specific comorbidity exclusion) and simulate:

  - How much the eligible pool grows.

  - Whether relaxing that rule materially changes overall survival curves, hazard ratios, or safety risks.

In landmark work, Trial Pathfinder and related approaches showed that broadening criteria based on data could double the eligible patient pool on average, without meaningfully changing hazard ratios for overall survival—meaning many exclusions were unnecessarily restrictive.
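The pool-growth half of that relaxation loop can be sketched in a few lines of Python. This is a minimal illustration on a synthetic cohort, not Trial Pathfinder's actual method: the `Patient` fields, the thresholds in `CRITERIA`, and the random cohort are all assumptions made up for the example, and the survival and safety re-analysis that a real tool would run after each relaxation is left out.

```python
import random
from dataclasses import dataclass

@dataclass
class Patient:
    age: int
    creatinine: float        # mg/dL
    ecog: int                # performance status, 0-4
    prior_malignancy: bool

# Each criterion is a named predicate. Thresholds are illustrative only.
CRITERIA = {
    "age_18_to_75": lambda p: 18 <= p.age <= 75,
    "creatinine_le_1.5": lambda p: p.creatinine <= 1.5,
    "ecog_0_or_1": lambda p: p.ecog <= 1,
    "no_prior_malignancy": lambda p: not p.prior_malignancy,
}

def synthetic_cohort(n, seed=0):
    """Build a made-up cohort; real work would load de-identified records."""
    rng = random.Random(seed)
    return [
        Patient(
            age=rng.randint(30, 90),
            creatinine=round(rng.uniform(0.6, 2.4), 2),
            ecog=rng.choice([0, 1, 1, 2, 3]),
            prior_malignancy=rng.random() < 0.15,
        )
        for _ in range(n)
    ]

def eligible(cohort, criteria):
    """Patients who satisfy every criterion."""
    return [p for p in cohort if all(rule(p) for rule in criteria.values())]

def relaxation_report(cohort, criteria):
    """For each criterion, count how many patients are gained by dropping it."""
    baseline = len(eligible(cohort, criteria))
    gains = {}
    for name in criteria:
        relaxed = {k: v for k, v in criteria.items() if k != name}
        gains[name] = len(eligible(cohort, relaxed)) - baseline
    return baseline, gains

cohort = synthetic_cohort(5000)
baseline, gains = relaxation_report(cohort, CRITERIA)
print(f"eligible under full criteria: {baseline} of {len(cohort)}")
for name, gain in sorted(gains.items(), key=lambda kv: -kv[1]):
    print(f"drop {name}: +{gain} patients")
```

Ranking criteria by the patients they exclude shows where a relaxation would matter most. Whether a given relaxation is acceptable still depends on the outcome and safety analysis described above.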

For community oncology, this is especially important: many traditional criteria were written around large academic centers, unintentionally filtering out patients with common real-world comorbidities seen in community practice. AI makes it possible to design studies that protect safety but are also realistic and inclusive.

2. Enrollment and Duration Forecasting

Even with better eligibility, planning accrual realistically is difficult. That’s where predictive models such as TrialDura come in.

- TrialDura uses a hierarchical attention transformer over multimodal inputs—disease area, intervention type, phase, region, eligibility complexity—to predict likely trial duration and enrollment speed.

- Trained on thousands of historical trials, it achieved a mean absolute error (MAE) of ~1.0 year and a root-mean-square error (RMSE) of ~1.39 years in trial duration prediction, outperforming simpler baselines.
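For readers less familiar with the two error metrics, here is how they are computed. The durations below are hypothetical numbers chosen for the example and are not TrialDura data.

```python
import math

def mae(actual, predicted):
    """Mean absolute error: the average size of the miss."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)

def rmse(actual, predicted):
    """Root-mean-square error: penalizes large misses more heavily."""
    squared = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    return math.sqrt(squared / len(actual))

# Hypothetical trial durations in years.
actual_years = [2.0, 3.5, 1.0, 4.0]
predicted_years = [2.5, 3.0, 1.5, 6.0]

print(f"MAE  = {mae(actual_years, predicted_years):.3f} years")
print(f"RMSE = {rmse(actual_years, predicted_years):.3f} years")
```

RMSE is never smaller than MAE, and the gap widens when a few predictions miss badly. An RMSE noticeably above the MAE therefore suggests that some trials are predicted much less accurately than the typical one.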

In parallel, commercial and open tools use ML to:

- Rank sites by probability of high enrollment based on past performance, patient mix, and operational metrics.

- Estimate screen fail rates and drop-out rates for a proposed protocol.

- Simulate multiple “what-if” scenarios:

  - Fewer sites with higher expected performance vs more sites with lower performance.

  - Different geographic mixes to improve diversity.

  - Decentralized elements (e.g., telehealth visits, local labs) to boost participation.
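A what-if comparison of site mixes can be sketched as a small Monte Carlo simulation. The version below is a deliberately simple model, not any vendor's tool: it assumes screening at each site is a Poisson process with a constant monthly rate, applies a flat screen-fail fraction, and ignores site start-up delays and between-site variability. All rates and the function names are hypothetical.

```python
import random
import statistics

def months_to_target(site_rates, target, screen_fail, rng):
    """Simulate months until `target` patients are enrolled.

    Independent Poisson processes add up to one Poisson process whose
    rate is the sum of the site rates, so the waiting time between
    successive enrollments is exponential with that combined rate.
    """
    enroll_rate = sum(site_rates) * (1.0 - screen_fail)
    return sum(rng.expovariate(enroll_rate) for _ in range(target))

def simulate(site_rates, target, screen_fail=0.3, runs=2000, seed=0):
    """Return the median and 90th percentile of simulated durations."""
    rng = random.Random(seed)
    samples = sorted(
        months_to_target(site_rates, target, screen_fail, rng)
        for _ in range(runs)
    )
    return {"median": statistics.median(samples), "p90": samples[int(0.9 * runs)]}

# Hypothetical scenarios: patients screened per site per month.
few_strong_sites = [2.0] * 10
many_weak_sites = [0.5] * 30

for label, rates in [("10 strong sites", few_strong_sites),
                     ("30 weak sites", many_weak_sites)]:
    result = simulate(rates, target=200)
    print(label, {k: round(v, 1) for k, v in result.items()})
```

Reporting a percentile alongside the median is the useful part: a plan built on the 90th-percentile duration is less likely to need a rescue amendment than one built on the expected value.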

McKinsey and others report that, across portfolios, AI/ML-enhanced site and country selection has improved identification of top-enrolling sites by 30–50% and accelerated enrollment by 10–15%, contributing to overall timeline compression of up to 30%.

For sponsors and ACCC-member programs alike, that can be the difference between a trial that languishes and one that reaches its endpoints on time.

3. Predicting Early Termination and Design Risk

A 2023 study proposed a machine learning pipeline to predict the probability of early trial termination using public registry data from ClinicalTrials.gov.

- Features included sponsor type, phase, therapeutic area, inclusion complexity, geography, and more.

- Interpretable models (e.g., gradient boosting with SHAP explanations) identified key risk drivers, such as:

  - Overly narrow indications.

  - Under-resourced geographies.

  - Complex, burdensome visit schedules.
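The idea of a risk score with per-feature explanations can be shown with a much simpler stand-in. The sketch below uses a logistic scoring function with hand-picked, hypothetical coefficients rather than gradient boosting, and it reads each feature's contribution directly off the linear model instead of computing SHAP values. Nothing here is fitted to registry data.

```python
import math

# Hypothetical coefficients on features scaled to the range 0-1.
INTERCEPT = -2.0
WEIGHTS = {
    "narrow_indication": 1.2,
    "under_resourced_geography": 0.8,
    "visit_burden": 1.5,
}

def termination_risk(features):
    """Return (probability, per-feature contribution to the log-odds)."""
    contributions = {name: WEIGHTS[name] * features[name] for name in WEIGHTS}
    log_odds = INTERCEPT + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-log_odds))
    return probability, contributions

design = {"narrow_indication": 1.0,
          "under_resourced_geography": 0.0,
          "visit_burden": 0.6}
risk, drivers = termination_risk(design)
top_driver = max(drivers, key=drivers.get)
print(f"risk = {risk:.2f}, largest driver: {top_driver}")

# What-if: the same design with a lighter visit schedule.
lighter = dict(design, visit_burden=0.2)
lighter_risk, _ = termination_risk(lighter)
print(f"risk with lighter visits = {lighter_risk:.2f}")
```

In a linear model the contributions add up exactly to the log-odds, which makes the explanation trivial. Tree ensembles need a method such as SHAP to produce a comparable additive breakdown.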

The goal is not to replace human judgment, but to flag designs at high risk of failure before launch, so teams can:

- Simplify visit schedules or reduce assessment burden.

- Adjust endpoints or sample size.

- Reconsider site mix or operational model.

In oncology, where patient populations are often small and every eligible patient matters, avoiding a preventable early-terminated trial is a major win—for both sponsors and communities.

Beyond Spreadsheets: In Silico Trials and Digital Twins

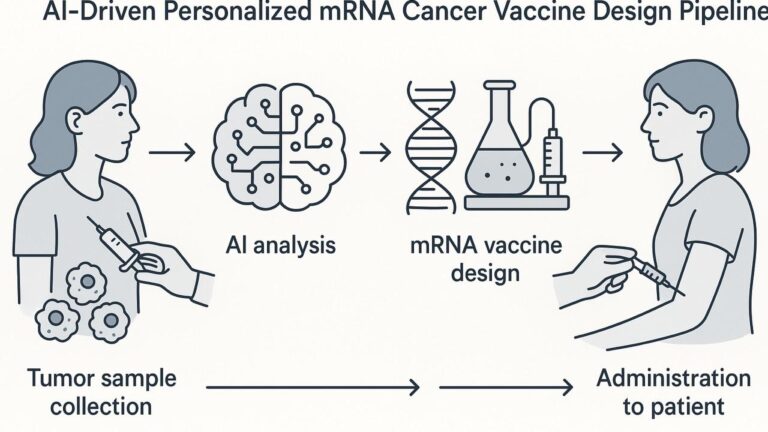

A broader vision is emerging around in silico clinical trials, where AI-enabled simulations complement or partially replace traditional control arms.

- Virtual cohorts are built from large real-world datasets and historical controls.

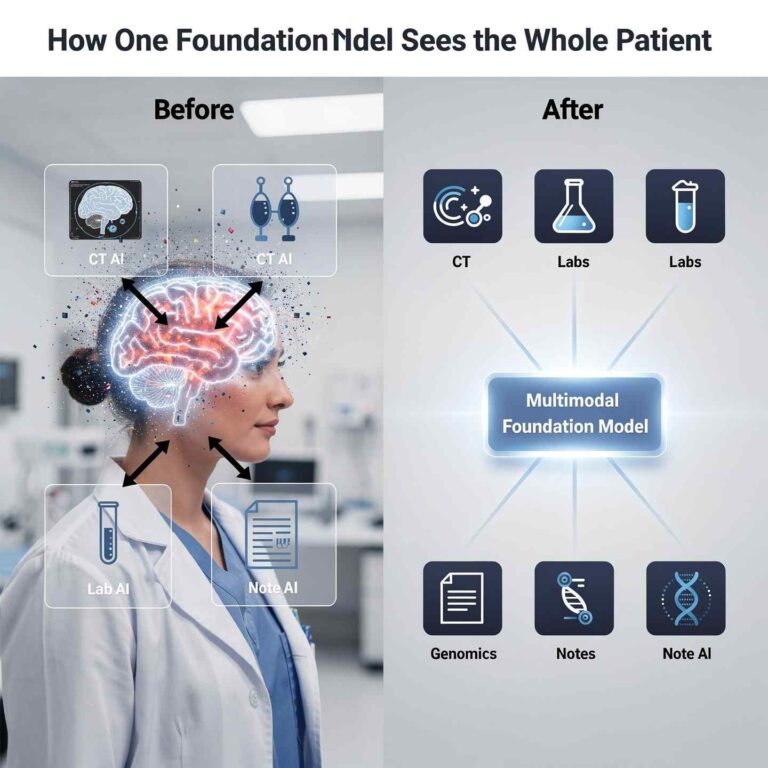

- Digital twins—patient-specific computational models combining imaging, genomics, and longitudinal data—can simulate individual trajectories under different treatments.

- These models can help:

  - Refine inclusion criteria and endpoints.

  - Estimate expected effect sizes more accurately.

  - In some settings, reduce the required size of placebo/control groups.
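To see why a more accurate effect-size estimate matters, it helps to look at how strongly the required trial size depends on it. The sketch below uses Schoenfeld's standard approximation for the number of events needed by a log-rank test with 1:1 allocation. That formula is a textbook choice brought in for illustration and is not taken from any of the tools discussed here; the function name and defaults are assumptions.

```python
import math
from statistics import NormalDist

def required_events(hazard_ratio, alpha=0.05, power=0.8):
    """Schoenfeld approximation: events needed for a two-sided log-rank
    test with equal allocation between arms."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    events = 4 * (z_alpha + z_beta) ** 2 / math.log(hazard_ratio) ** 2
    return math.ceil(events)

for hr in (0.65, 0.70, 0.75, 0.80):
    print(f"assumed HR {hr:.2f}: {required_events(hr)} events")
```

Moving the assumed hazard ratio from 0.70 to 0.75 raises the requirement from 247 to 380 events. A design that assumes too large an effect will therefore be underpowered, which is the kind of error better effect-size estimates are meant to prevent.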

In oncology and dementia research, early work shows digital twins can predict survival or disease progression trajectories at the individual level, supporting both trial design and adaptive decision-making.

Regulatory frameworks are still evolving, but the direction is clear: simulation will increasingly sit alongside traditional statistics as a core design tool.

Where AI Meets Community Oncology and ACCC

The Association of Community Cancer Centers (ACCC) and its Community Oncology Research Institute (ACORI) have emphasized that trial design must reflect real-world patient populations and practice realities.

AI-enabled design directly supports several ACCC priorities:

- More representative eligibility: Using real-world and community data to ensure criteria don’t inadvertently exclude patients with common comorbidities or social risk factors.

- Feasible protocols: Simulation of visit schedules, lab requirements, and procedures to reduce site and patient burden.

- Faster first-patient-in (FPI): AI-assisted feasibility and reverse matching (trial-to-patient search) shorten the gap between trial activation and first enrollment.

- Diversity by design: AI can help identify geographies and sites that serve underrepresented populations and simulate whether eligibility and operations will realistically allow these patients to enroll.

CANCER BUZZ and ACCC Buzz have already highlighted how AI tools (like PRISM and others) are being piloted to match patients to trials and shorten activation-to-enrollment timelines in community settings. Extending this same mindset “upstream” into design makes trials more community-ready from day one.

Cost and Productivity Impact

Timelines and cost are tightly coupled:

- A Nature Digital Medicine–cited analysis found that AI-powered patient recruitment alone could cut trial costs by ~70% and expedite timelines by up to 40% in some settings, largely through better targeting and fewer failed screens.

- McKinsey reports that combining classical AI/ML with gen AI for trial design, site selection, and operational decision-making has:

Even conservative scenarios—30% faster timelines and fewer amendments—translate into:

- Earlier regulatory submissions.

- Lower burn per asset.

- Faster patient access to new therapies.

- Higher net present value (NPV) for the portfolio.
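The NPV effect of an earlier launch can be worked through with simple discounting. Every number below (discount rate, revenue, trial spend, patent expiry) is hypothetical and in arbitrary units of millions of dollars. The model also assumes that revenue ends at a fixed patent-expiry date, so launching sooner adds selling time instead of just shifting it.

```python
def asset_npv(launch_year, rate=0.10, patent_expiry=15.0,
              revenue_per_year=300.0, cost_per_year=40.0, step=0.5):
    """Discounted value of one asset, evaluated in half-year periods.

    Before launch each period carries trial spend; from launch until
    patent expiry each period carries revenue. Cash flows are discounted
    to today at the end of the period in which they occur.
    """
    total = 0.0
    t = 0.0
    while t < patent_expiry:
        per_year = revenue_per_year if t >= launch_year else -cost_per_year
        total += per_year * step / (1 + rate) ** (t + step)
        t += step
    return total

baseline = asset_npv(launch_year=8.0)
accelerated = asset_npv(launch_year=7.0)   # twelve months earlier
print(f"baseline NPV:    {baseline:8.1f}")
print(f"accelerated NPV: {accelerated:8.1f}")
print(f"gain from acceleration: {accelerated - baseline:.1f}")
```

The gain comes from two sources at once: a year of trial spend is avoided and a year of revenue is added, both at a point where discounting has not yet eroded much of their value.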

Practical Limitations and Guardrails

Despite the promise, several cautions are essential:

- Data bias: If historical data under-represent certain populations or practice settings, AI-optimized designs can inadvertently “bake in” the same inequities. Using diverse, community-based data and explicitly auditing outputs for fairness is critical.

- Overfitting to history: Past accrual problems may reflect outdated protocols or pre–COVID patterns. Models must be regularly retrained and stress-tested.

- Regulatory expectations: In silico evidence and AI-derived design assumptions must be transparent, explainable, and aligned with FDA/EMA guidance.

- Human oversight: As ACORI and ACCC leaders emphasize, AI tools should augment—not replace—trial designers, investigators, and research staff.

Human-in-the-loop review—where oncology experts review AI-simulated scenarios, validate feasibility, and incorporate local context—is non-negotiable.
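One concrete form of auditing outputs for fairness is to compare eligibility rates across patient groups once the proposed criteria have been applied. The sketch below is one simple check among many possible ones. The group labels, the counts, and the 0.8 ratio threshold are all assumptions chosen for illustration, not a regulatory standard.

```python
from collections import Counter

def eligibility_rates(records):
    """records: iterable of (group, is_eligible) pairs."""
    totals, eligible = Counter(), Counter()
    for group, is_eligible in records:
        totals[group] += 1
        eligible[group] += int(is_eligible)
    return {group: eligible[group] / totals[group] for group in totals}

def flag_disparities(rates, min_ratio=0.8):
    """Flag groups whose rate falls below min_ratio times the best rate."""
    best = max(rates.values())
    if best == 0:
        return {}   # nobody is eligible, so there is no ratio to compare
    return {g: r / best for g, r in rates.items() if r / best < min_ratio}

# Hypothetical screening results for two groups of 100 patients each.
records = ([("A", True)] * 60 + [("A", False)] * 40 +
           [("B", True)] * 35 + [("B", False)] * 65)

rates = eligibility_rates(records)
flags = flag_disparities(rates)
print("eligibility rates:", rates)
print("flagged groups (ratio to best):", {g: round(v, 2) for g, v in flags.items()})
```

A flagged group is a prompt for expert review, not a verdict. The reviewers decide whether the criterion driving the gap is clinically necessary.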

Looking Ahead: Gen AI Copilots for Trial Designers

Generative AI adds another layer: protocol and document copilots.

Recent work shows that LLMs fine-tuned on trial protocols can:

- Auto-draft initial protocols and informed consent forms based on high-level design objectives.

- Propose alternative designs (e.g., adaptive arms, enrichment strategies) with pros and cons.

- Generate “what-if” scenarios that combine scientific, operational, and diversity goals.

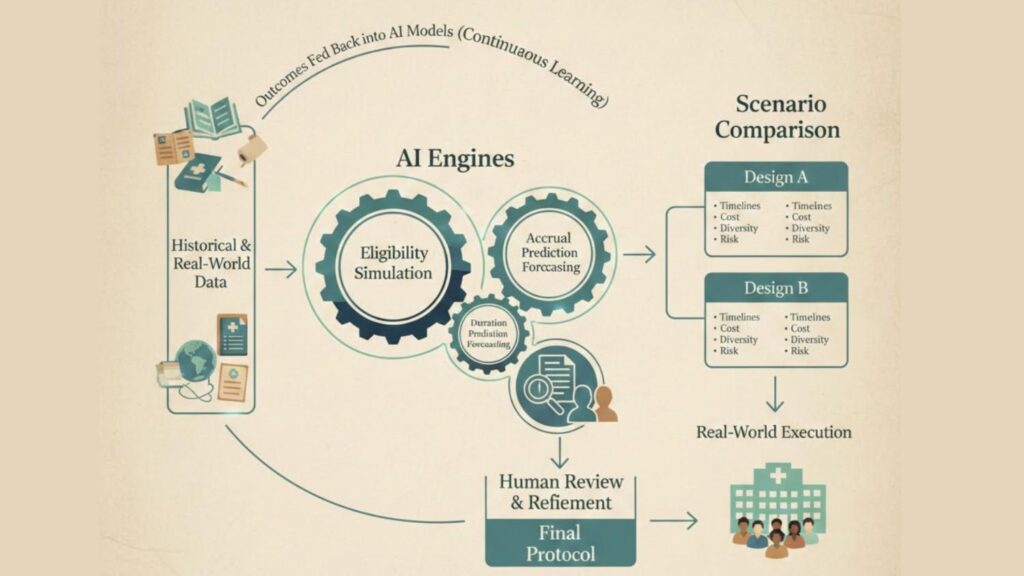

Paired with predictive engines (for accrual, outcomes, and duration), this creates an iterative design loop:

1. Set objectives and constraints (effect size, safety, population, diversity, budget).

2. Let the AI propose candidate designs with predicted timelines, accrual profiles, and risks.

3. Have human experts refine, reject, or combine options.

4. Lock a protocol that’s been stress-tested in silico instead of in the real world.
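The loop above can be sketched as a filter-and-review pipeline. Everything in this example is hypothetical: the `Design` fields stand in for outputs of predictive engines, the candidate values are invented, and the `approve` callback is only a placeholder for expert review, which in practice is a discussion and not a function call.

```python
from dataclasses import dataclass

@dataclass
class Design:
    name: str
    sites: int
    predicted_months: float
    predicted_cost_musd: float
    termination_risk: float

def shortlist(candidates, max_months, max_cost_musd, max_risk):
    """Keep designs that meet every constraint, fastest first."""
    feasible = [
        d for d in candidates
        if d.predicted_months <= max_months
        and d.predicted_cost_musd <= max_cost_musd
        and d.termination_risk <= max_risk
    ]
    return sorted(feasible, key=lambda d: d.predicted_months)

def human_review(designs, approve):
    """Placeholder for expert review: keep only approved designs."""
    return [d for d in designs if approve(d)]

# Invented candidates, as if proposed by a design copilot.
candidates = [
    Design("A: strict criteria", 40, 30.0, 55.0, 0.35),
    Design("B: relaxed criteria", 25, 24.0, 48.0, 0.20),
    Design("C: decentralized visits", 60, 20.0, 70.0, 0.15),
    Design("D: relaxed criteria, more sites", 30, 26.0, 52.0, 0.25),
]

shortlisted = shortlist(candidates, max_months=28, max_cost_musd=60, max_risk=0.30)
approved = human_review(shortlisted, approve=lambda d: d.sites <= 25)
print("shortlisted:", [d.name for d in shortlisted])
print("approved:", [d.name for d in approved])
```

Separating the automated filter from the review step keeps the human decision explicit: the model narrows the options, and the experts choose among them.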

For ACCC member programs and community oncology leaders, these copilots can support local protocol adaptations, cooperative group trials, and investigator-initiated studies in ways that were previously out of reach.

Figure: AI-Optimized Trial Design Loop

Conclusion: From Best Guess to Best Simulation

Clinical trials will always involve uncertainty. But they no longer have to be built on educated guesswork alone.

AI-optimized clinical trial design moves the hardest questions—“Will we enroll?”, “Is this feasible in the community?”, “Are we excluding too many patients?”—upstream into a simulated environment. Instead of discovering flaws after a protocol opens, teams can discover and fix them before activation.

For oncology, and especially for community practices represented by ACCC, this shift means:

- Faster, more feasible trials that fit into real-world workflows.

- Broader, more diverse participation, because criteria and operations are tested against the populations actually seen in clinic.

- Lower cost and higher probability of success, giving more promising therapies a real chance to reach patients.

In an era where scientific discovery is outpacing our ability to test it, AI-optimized design is not a luxury—it is becoming a core capability for any organization serious about bringing new treatments to patients quickly, equitably, and sustainably.